A Walkthrough of the Kubernetes Ecosystem: Pods, Deployments, Services, Ingress.

A foundation for Kubernetes, Part 1.

About Me:

Hey folks! I'm Vishwa, a DevOps and open-source enthusiast. I am currently doing LearnInPublic and BuildInPublic, documenting my entire journey on LinkedIn and Twitter to stay accountable and to connect with like-minded people, learn from them, and help each other. Learning in the open also makes it easier for me to experiment along the way. In this blog, I'll delve deeper into Kubernetes, and my goal is to simplify complex concepts for everyone. Without further delay, let's get into Part 1.

What is Kubernetes?

- Kubernetes (K8s) is an open-source tool that helps us deploy, scale, and manage containerized applications.

- It is called a container orchestrator because it manages containers across a group of machines for us.

Before Kubernetes?

- People had to scale applications manually, which was time-consuming.

- Resources were poorly optimized for containerized applications.

Problems Kubernetes Addresses?

- Auto healing is one of the main advantages of Kubernetes. If a container goes down, K8s detects it and brings it back up without any manual intervention.

- Auto scaling is another advantage of K8s. When an application receives a heavy load, K8s can spin up additional copies of it automatically, once autoscaling has been configured.
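To make the auto scaling idea concrete, here is a minimal Python sketch of the scaling rule described in the Horizontal Pod Autoscaler documentation: desired replicas = ceil(current replicas × current metric / target metric). The function name and the sample numbers are my own for illustration, and the real autoscaler also applies a tolerance and minimum/maximum replica limits that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Replica count suggested by the basic autoscaling ratio (no tolerance, no min/max)."""
    return math.ceil(current_replicas * current_metric / target_metric)


# 2 replicas averaging 90% CPU with a 50% target: scale up.
scale_up = desired_replicas(2, 90, 50)

# 4 replicas averaging 20% CPU with a 50% target: scale down.
scale_down = desired_replicas(4, 20, 50)

print(scale_up, scale_down)
```

The point is only that the replica count follows the load: when the measured value is above the target the count grows, and when it is below the target the count shrinks.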

Vocabulary in K8s?

- You will often hear people talk about Clusters, Pods, Deployments, Services, Ingress, and more. Let's figure out what each of these important terms means.

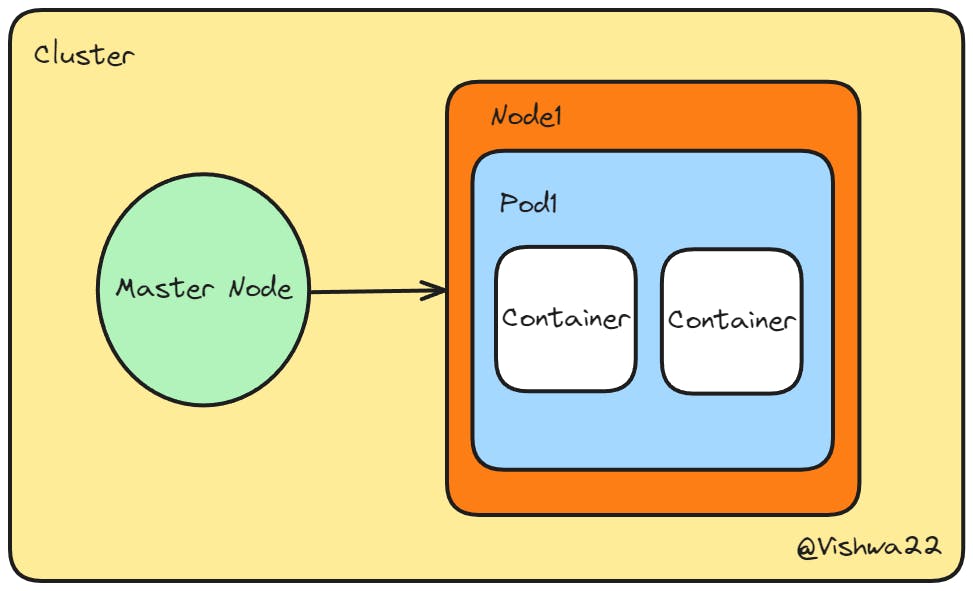

What is a Cluster?

- A cluster is a group of nodes that runs containerized applications efficiently and helps with scalability and automation.

- Pods are always deployed onto the nodes of a cluster.

- These nodes can be physical servers or virtual machines.

Pod, What is it?

- A Pod is a group of one or more containers that are deployed together.

- The containers in a Pod share networking and other resources, such as storage.

- A Pod is described in a YAML file that contains the details of the containerized application.
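As a rough illustration of what such a Pod definition contains, the sketch below builds one as a Python dictionary and prints it as JSON (kubectl accepts manifests in JSON as well as YAML). The Pod name `web`, the image `nginx:1.25`, and the port are example values I picked, not something prescribed by Kubernetes.

```python
import json


def pod_manifest(name: str, image: str, port: int) -> dict:
    """Build a minimal single-container Pod manifest as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }
            ]
        },
    }


# Example values only: a Pod called "web" running an nginx image on port 80.
manifest = pod_manifest("web", "nginx:1.25", 80)
print(json.dumps(manifest, indent=2))
```

The same four top-level fields (`apiVersion`, `kind`, `metadata`, `spec`) show up in every Kubernetes object, so this shape is worth getting familiar with.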

Deployment, What is this?

- A Deployment is a wrapper around a Pod: we create a file, describe the Pod in it, and deploy that file.

- In practice we rarely deploy a Pod directly; we use a Deployment to do it.

Why do we use a Deployment?

- A Pod deployed on its own cannot be scaled and is not recreated if it is deleted or its node fails. A Deployment is what gives us scaling and auto-healing.

How does it do the Scaling and Healing?

- A Deployment uses a ReplicaSet, an object that the Deployment creates for us from the replica count we mention in the Deployment file.

- Using Deployments, you can simply scale the number of Pod replicas up or down to meet changing demand.

- Self-healing is the other great advantage: the Deployment ensures that the number of Pods mentioned in replicas is always up and running, and replaces any Pod that fails.
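The scaling and healing behaviour above boils down to one idea: keep comparing the desired number of replicas with what is actually running, and fix the difference. The toy Python loop below imitates that idea in memory. It is only a sketch under my own assumptions (the `reconcile` function and the `web-N` pod names are made up) and is not how the real controller is implemented.

```python
import itertools
from typing import List

# Hypothetical counter used to give every new pod a unique name.
_pod_ids = itertools.count(1)


def reconcile(desired: int, running: List[str]) -> List[str]:
    """Return a pod list adjusted to match the desired replica count."""
    pods = list(running)
    while len(pods) < desired:   # too few pods: start replacements
        pods.append(f"web-{next(_pod_ids)}")
    while len(pods) > desired:   # too many pods: remove the extras
        pods.pop()
    return pods


pods = reconcile(3, [])          # initial rollout with replicas = 3
crashed = pods[1]
pods.remove(crashed)             # simulate one pod going down
pods = reconcile(3, pods)        # healing: back to 3 pods
scaled_down = reconcile(1, pods) # scaling: replicas changed to 1

print(pods, scaled_down)
```

Healing and scaling are the same operation here: in one case the running list changed, in the other the desired number changed.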

What are Services in K8s and why do we use them?

- A Service gives a group of Pods a stable address and helps us expose our application, either inside the cluster or to the outside world.

- There are three commonly used Service types: ClusterIP, NodePort, and LoadBalancer.

Service Types Defined:

- Using the LoadBalancer type, we can access the application from the outside world.

- Using the NodePort type, the application is exposed on a port of every node, so anyone who can reach the nodes (typically people within our organization) can access it.

- Using ClusterIP, the default type, the application is reachable only from inside the cluster, for example from other Pods. To open it in a browser on your own machine you need something extra, such as `kubectl port-forward`.
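The three types can be summed up as "who is allowed to reach the application". The small Python table below encodes the bullets above; the location labels (`cluster`, `node-network`, `internet`) are names I made up for this sketch, and it deliberately ignores firewalls and cloud-provider details.

```python
# Where a client must sit to reach a Service of each type (simplified).
REACHABLE_FROM = {
    "ClusterIP": {"cluster"},
    "NodePort": {"cluster", "node-network"},
    "LoadBalancer": {"cluster", "node-network", "internet"},
}


def can_reach(service_type: str, client_location: str) -> bool:
    """True if a client at client_location can reach a Service of service_type."""
    if service_type not in REACHABLE_FROM:
        raise ValueError(f"unknown Service type: {service_type}")
    return client_location in REACHABLE_FROM[service_type]


for service_type in REACHABLE_FROM:
    print(service_type, "reachable from the internet:", can_reach(service_type, "internet"))
```

Each type builds on the previous one, which is why the sets only grow from ClusterIP to LoadBalancer.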

What is Ingress used for?

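- Services alone offer only basic load balancing; they don't provide enterprise-level features such as routing by host name or URL path.

- Ingress also reduces cost: instead of paying for a static IP for each Service, a single entry point can route traffic to many Services.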
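To picture how one entry point can serve many Services, here is a toy Python router that picks a Service from the host name and URL path of a request. The host names, paths, and Service names are invented for the example, and the plain string-prefix matching is a simplification of how real Ingress rules match paths.

```python
from typing import Optional

# (host, path prefix, backend Service) -- all example values.
RULES = [
    ("shop.example.com", "/cart", "cart-service"),
    ("shop.example.com", "/", "frontend-service"),
    ("api.example.com", "/", "api-service"),
]


def route(host: str, path: str) -> Optional[str]:
    """Return the backend Service for a request, or None if no rule matches."""
    matches = [
        (prefix, service)
        for rule_host, prefix, service in RULES
        if rule_host == host and path.startswith(prefix)
    ]
    if not matches:
        return None
    # The longest matching prefix wins, so /cart beats the catch-all /.
    return max(matches, key=lambda match: len(match[0]))[1]


print(route("shop.example.com", "/cart/items"))
print(route("shop.example.com", "/about"))
print(route("api.example.com", "/v1/users"))
```

All three Services sit behind the same address; only the rules decide where each request ends up.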

Simple architecture for understanding:

- The master node (also called the control plane) is the one that controls what happens on the worker nodes (Node 1).

- To do so, the master node holds several components, which take care of tasks such as identifying nodes with free capacity and scheduling Pods onto them.
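The "find a free node and schedule the Pod" step can be sketched in a few lines of Python. This is a deliberately naive version based on my own assumptions: it looks only at free CPU and picks the node with the most of it, whereas the real scheduler filters and scores nodes on many more criteria. The node names and numbers are examples.

```python
from typing import Dict, Optional


def schedule(pod_cpu: float, free_cpu_by_node: Dict[str, float]) -> Optional[str]:
    """Pick the node with the most free CPU that can still fit the pod."""
    candidates = {
        node: free for node, free in free_cpu_by_node.items() if free >= pod_cpu
    }
    if not candidates:
        return None  # no node has room; the pod would stay pending
    return max(candidates, key=candidates.get)


nodes = {"node-1": 0.5, "node-2": 2.0}  # free CPU cores per worker node
placed = schedule(1.0, nodes)            # fits only on node-2
unplaced = schedule(4.0, nodes)          # fits nowhere

print(placed, unplaced)
```

When no node fits, nothing is chosen, which corresponds to a Pod waiting in the Pending state until capacity frees up.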

Conclusion:

I am grateful to everyone who has read this. I hope you enjoyed it. Please let me know if you found it useful by leaving a comment, giving it a like, and forwarding it to your friends. Let's explore the creation of Pods and Deployments in the upcoming part. Thank you all once again.